AI Governance Doesn't Have to Be Scary

Model Monster helps you see, understand, and govern your AI systems as they actually work—so legal, compliance, and engineering teams can make decisions together, with confidence.

Now accepting organizations into our early access program. Work with us to see how Model Monster can accelerate your governance.

AI risk doesn't live in models. It lives in systems.

Most AI governance still treats risk as something you approve at the model level. But real risk shows up in production — shaped by how systems are designed, connected, and operated.

In practice, AI risk depends on:

- What data the system can access

- What actions it can take

- What guardrails exist (or don't)

- How everything connects in production

When governance stops at model approval, teams are forced into painful tradeoffs:

You approved a model. You deployed a system.

The chatbot you approved now touches customer data, calls external services, and operates with permissions no one tracks centrally.

You finished a risk assessment. The system kept changing.

Updates ship, configurations drift, and risk reviews quietly fall out of sync with reality.

Engineering builds it. Legal reviews it. No shared map.

The system exists, but not in a form both teams can reason about. Translating between them takes time — and guesswork.

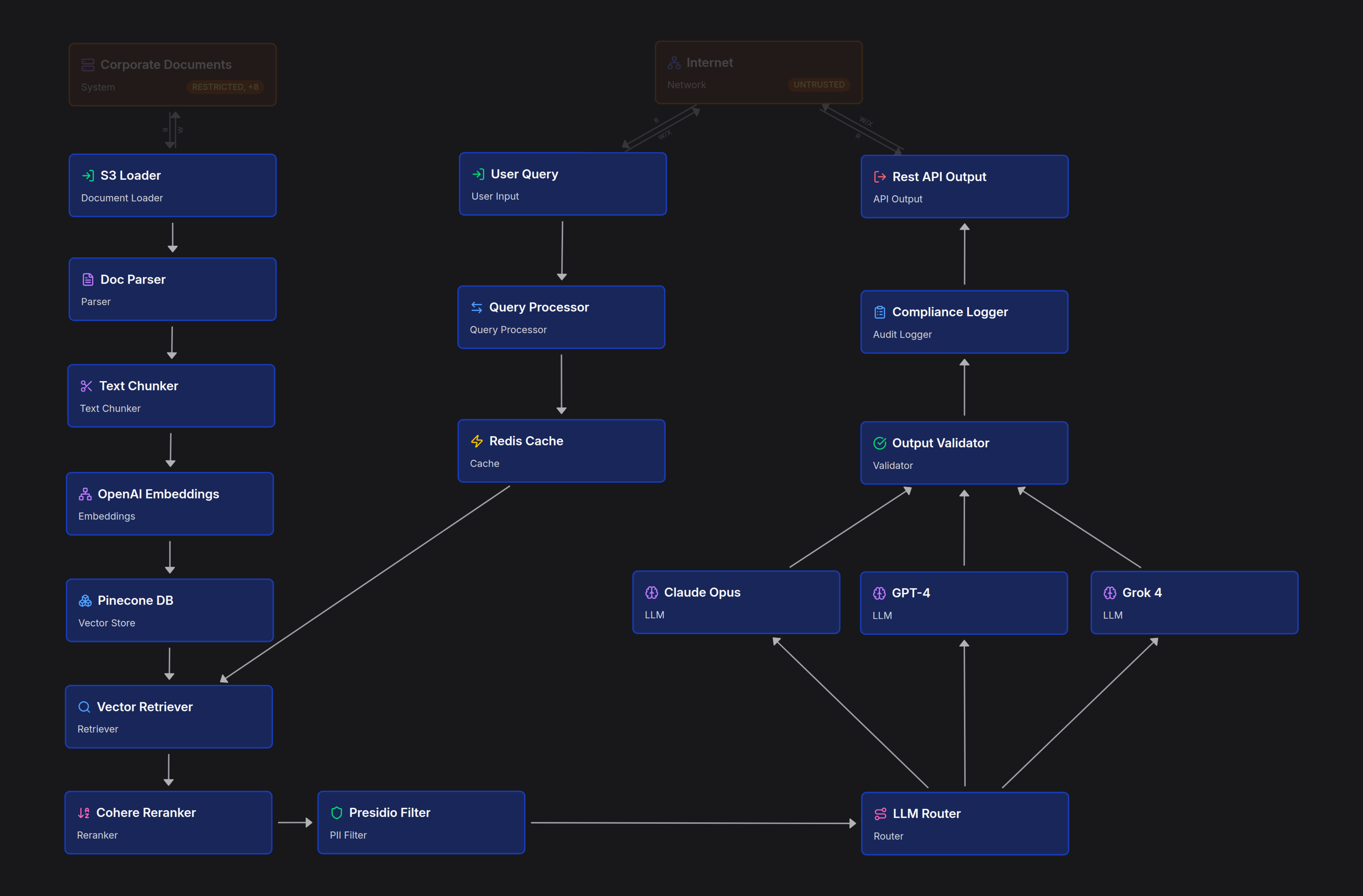

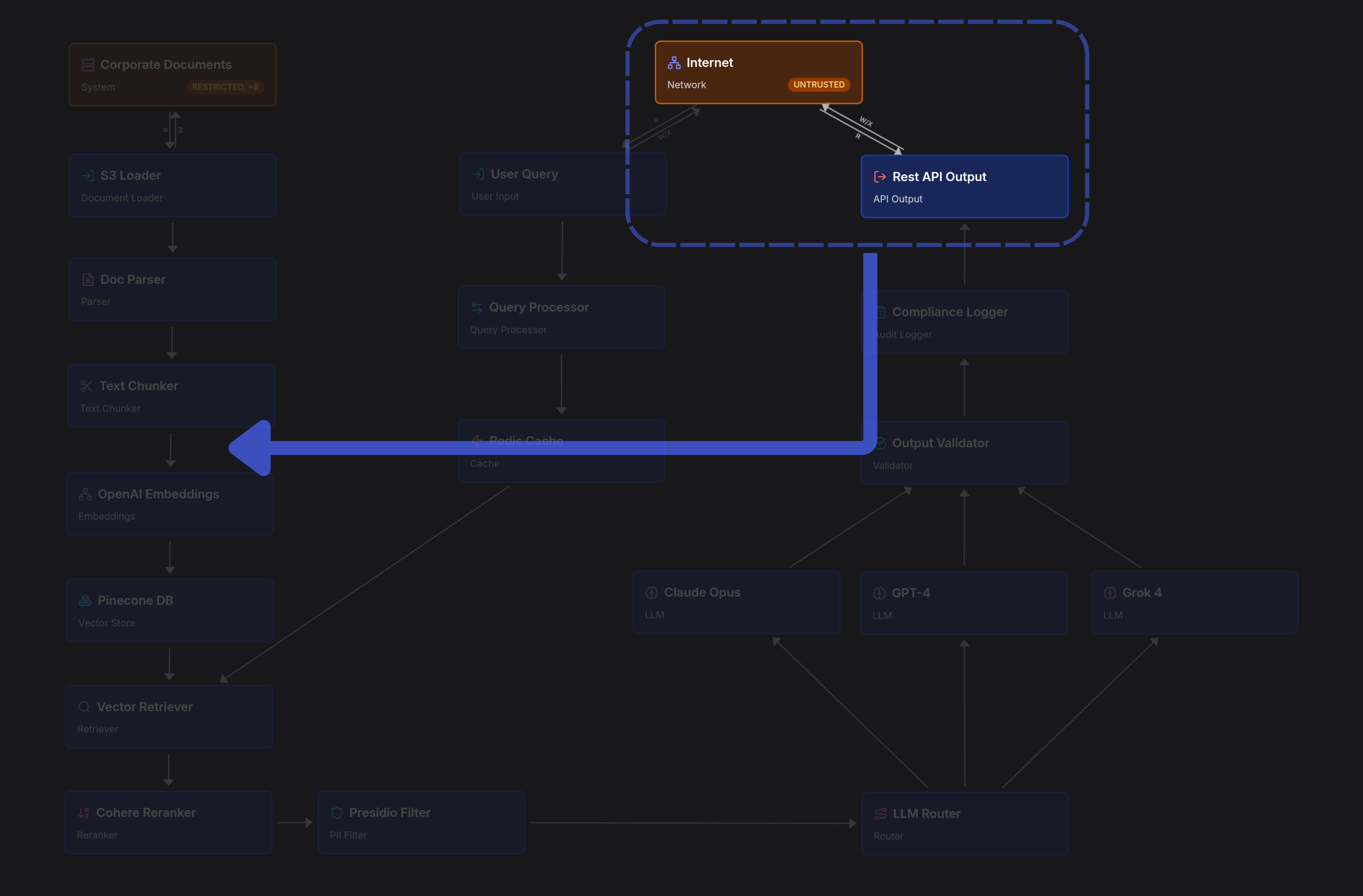

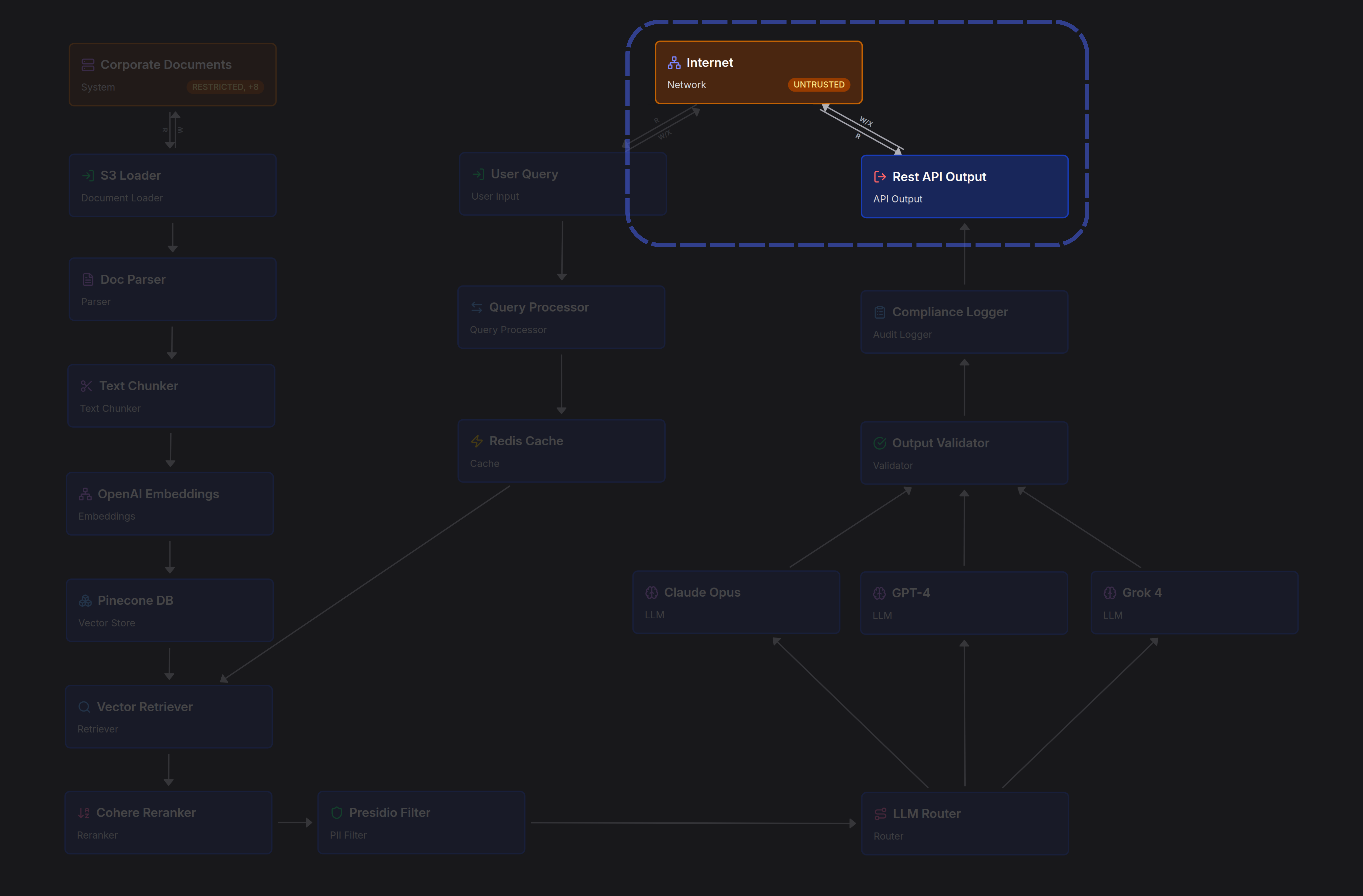

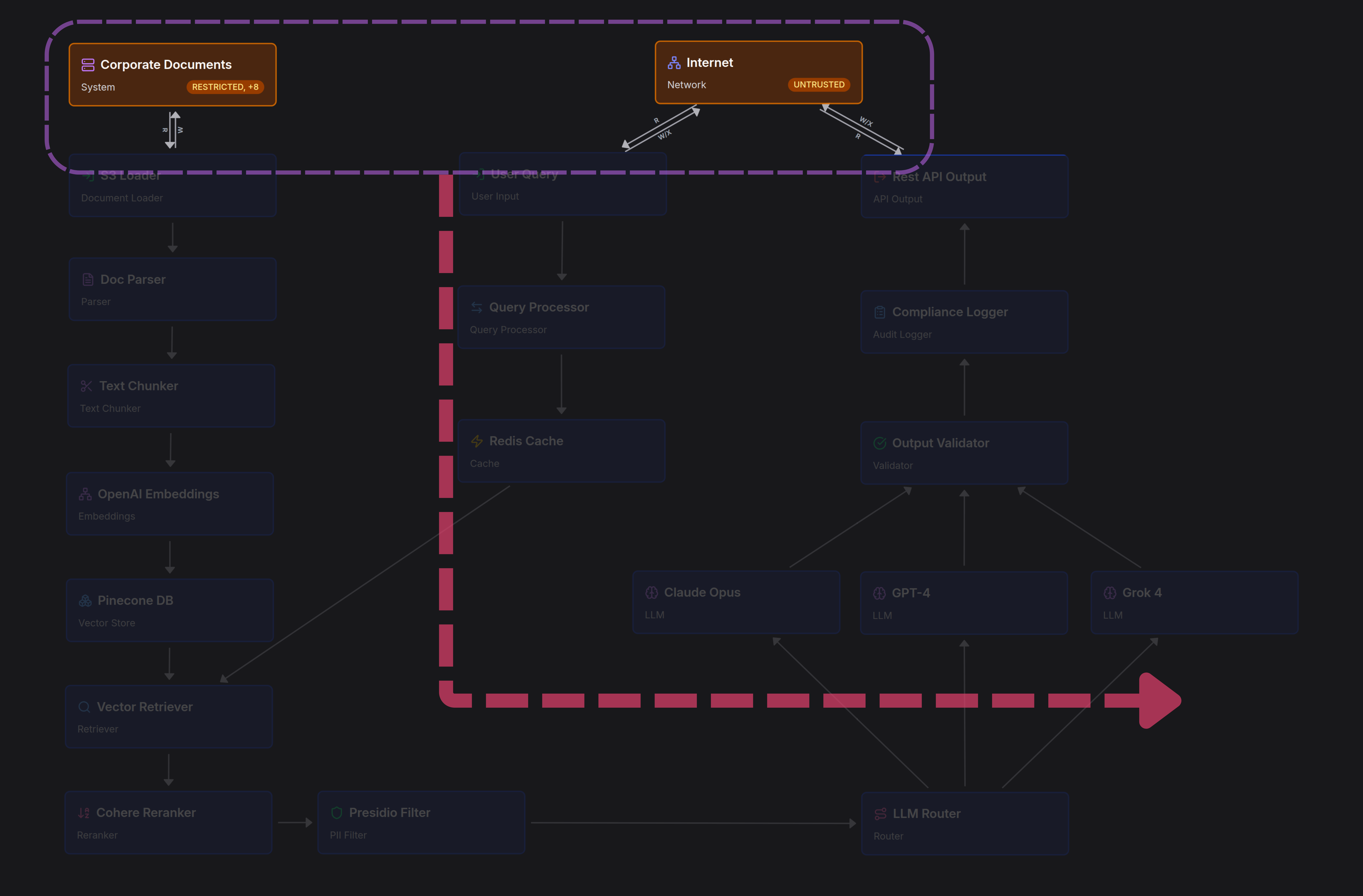

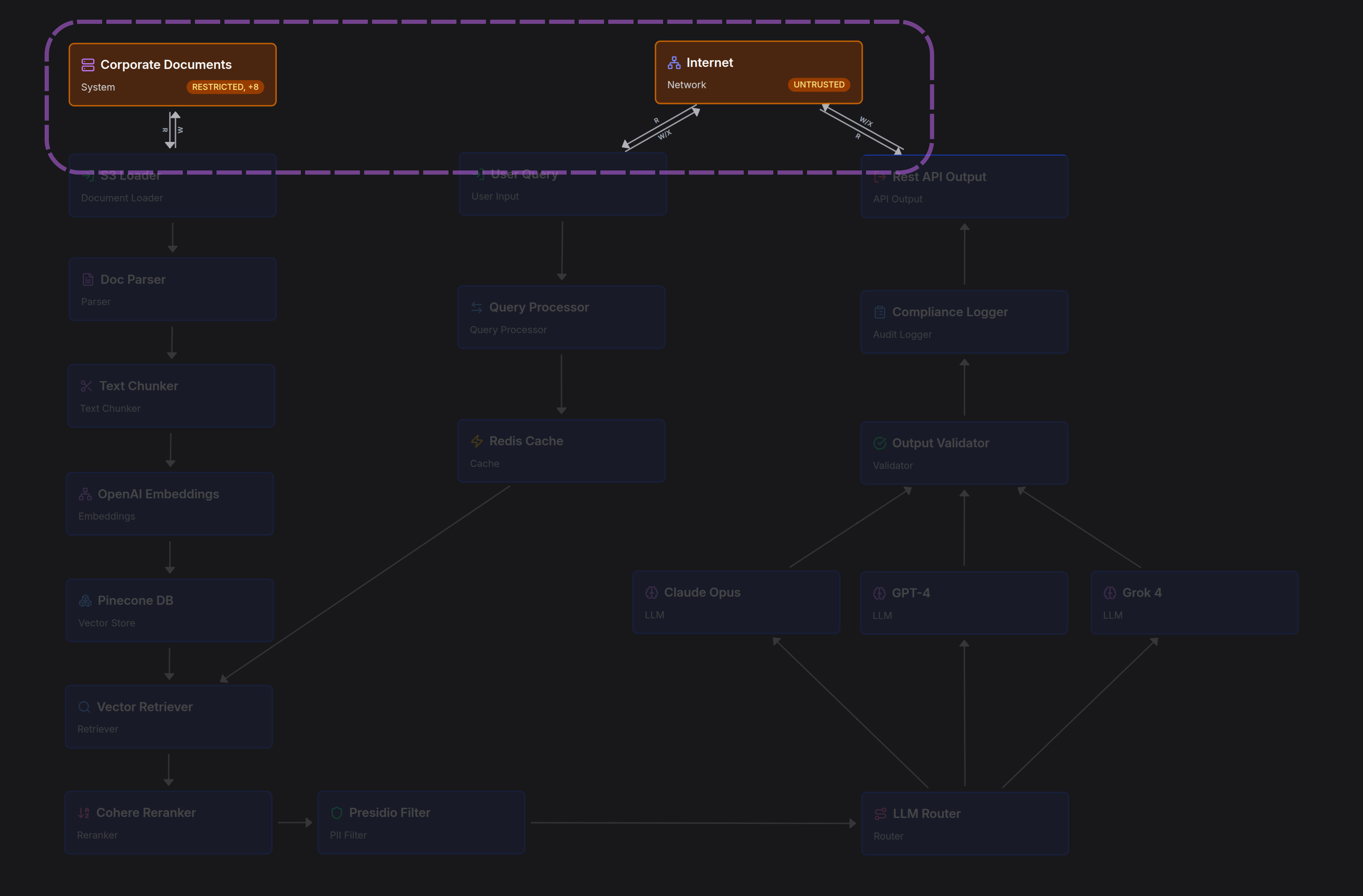

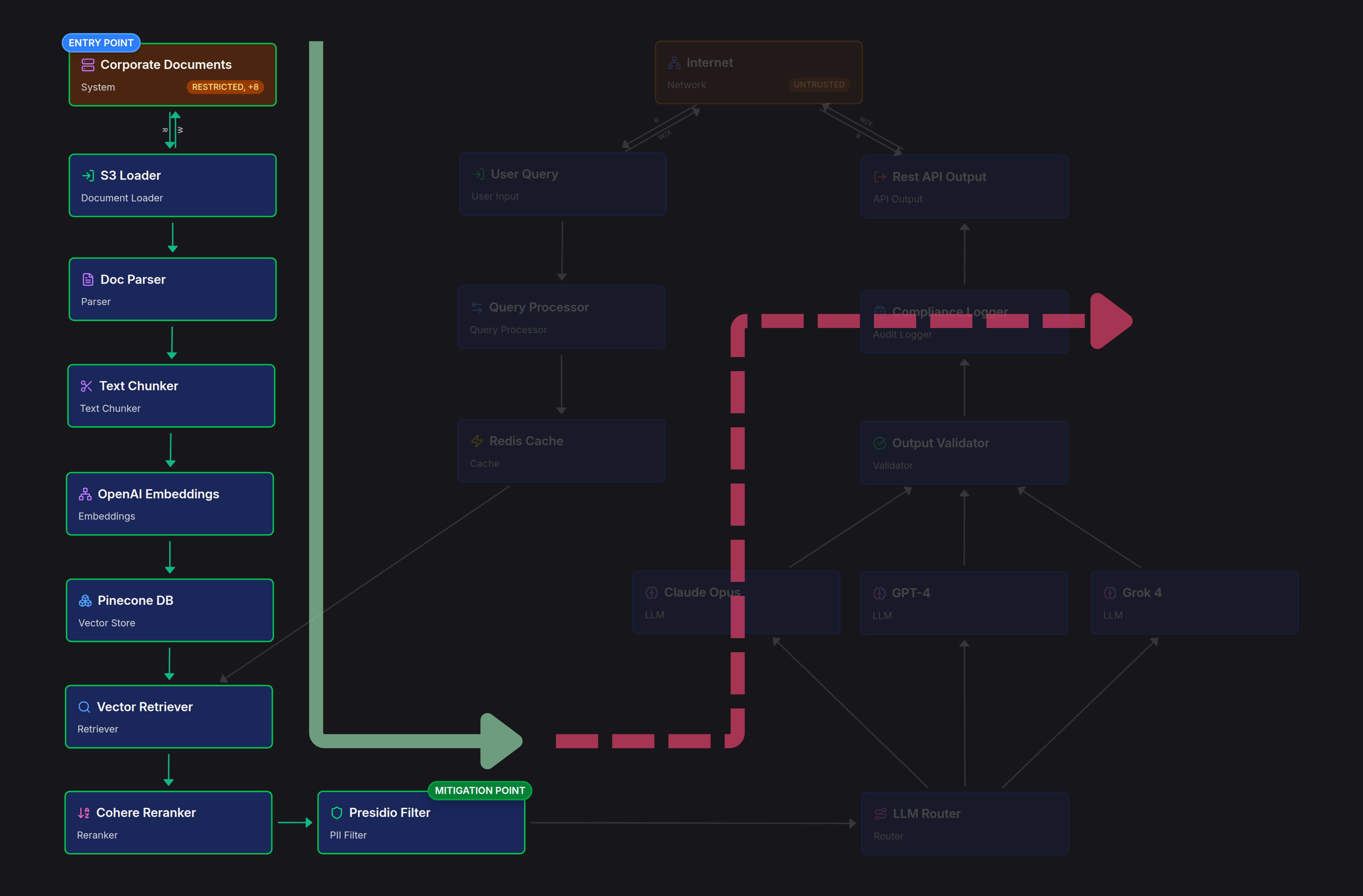

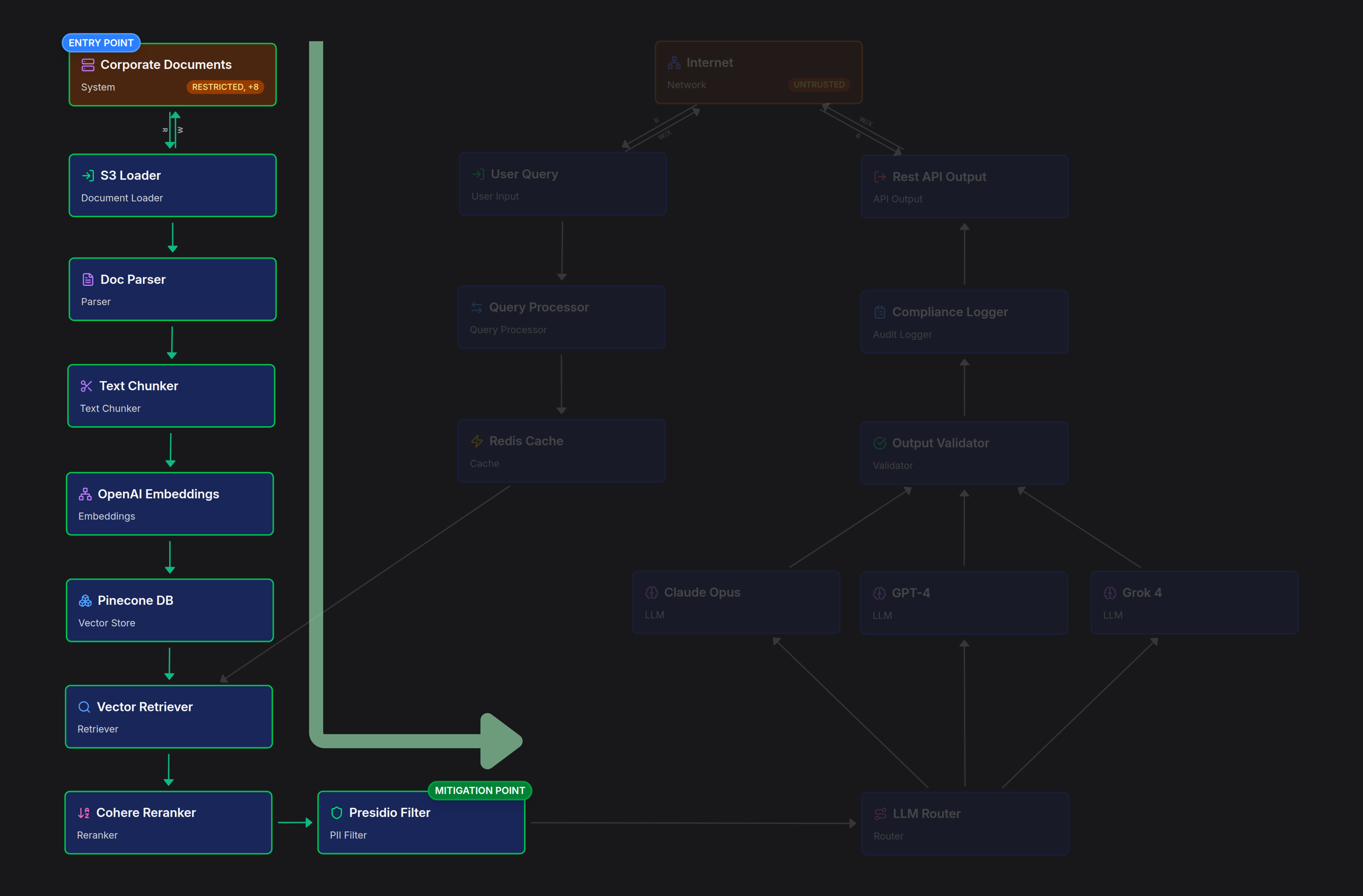

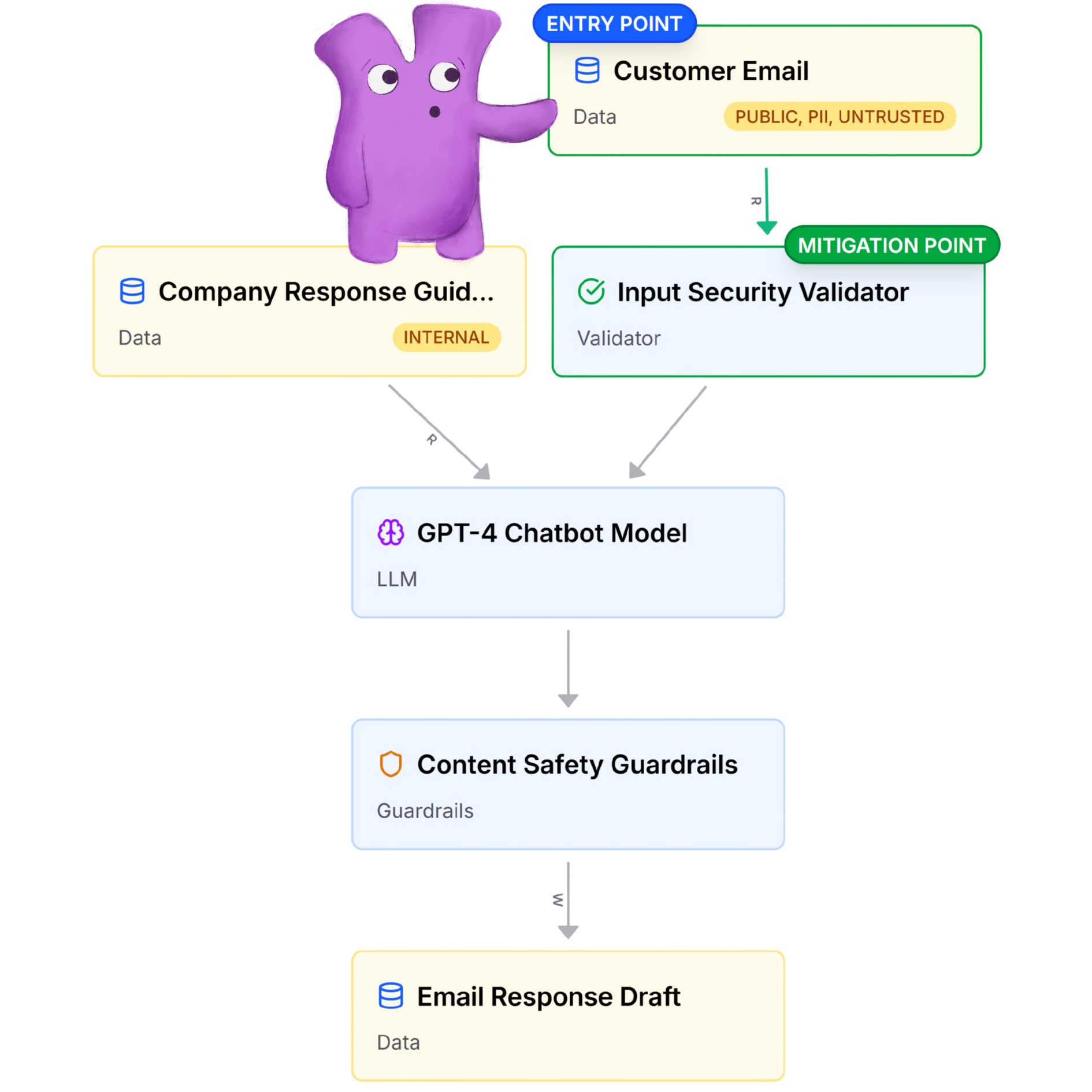

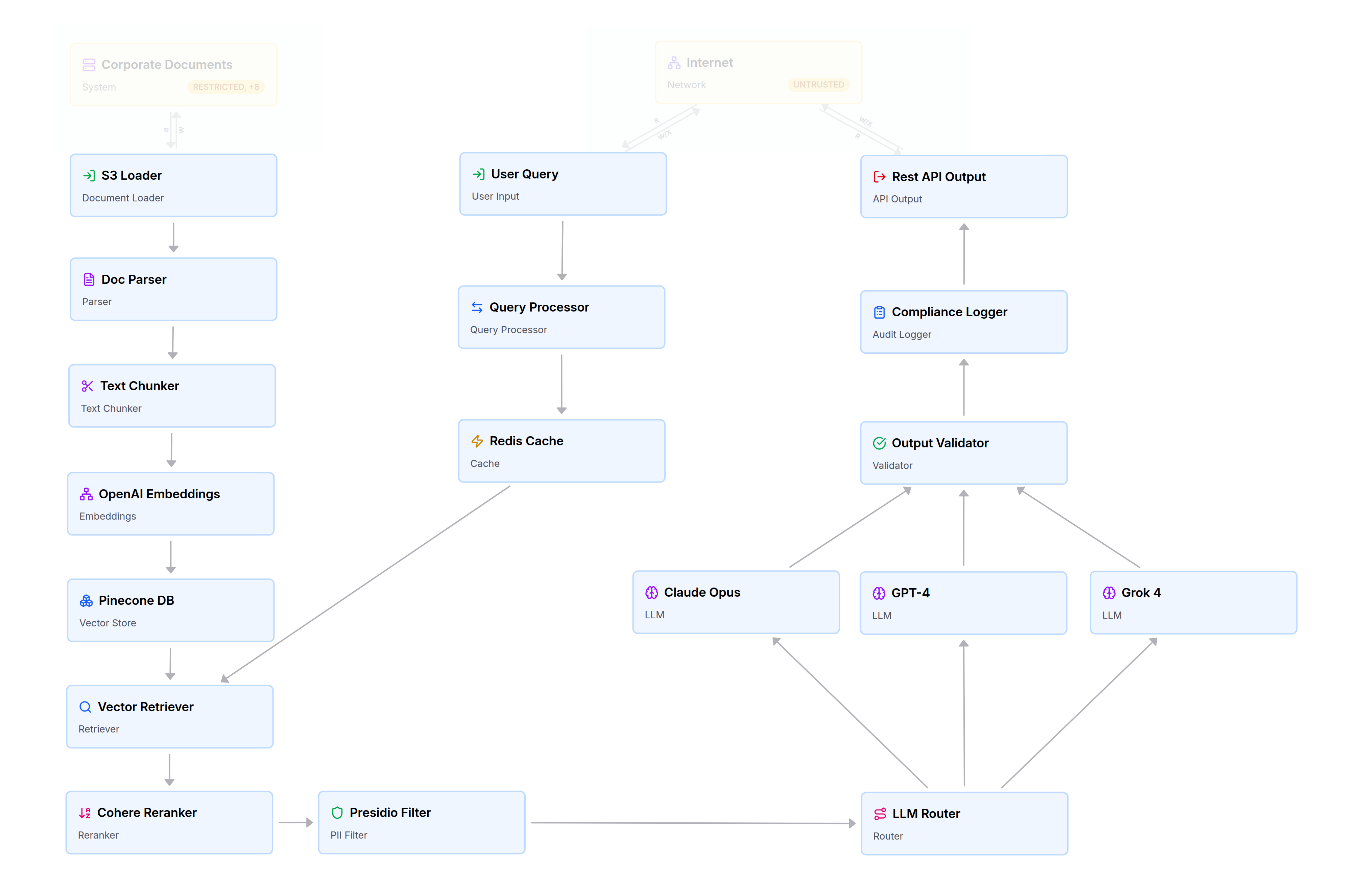

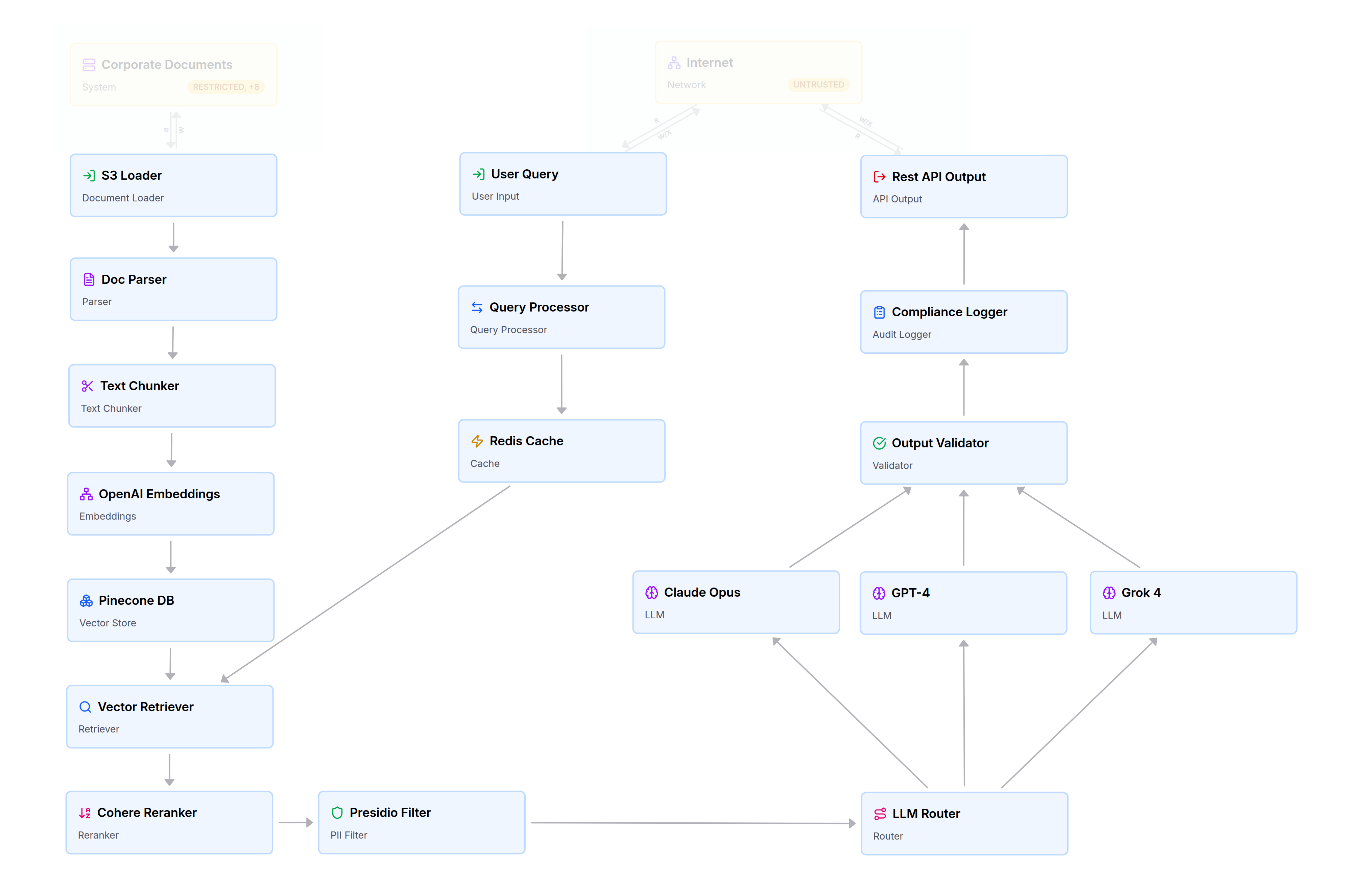

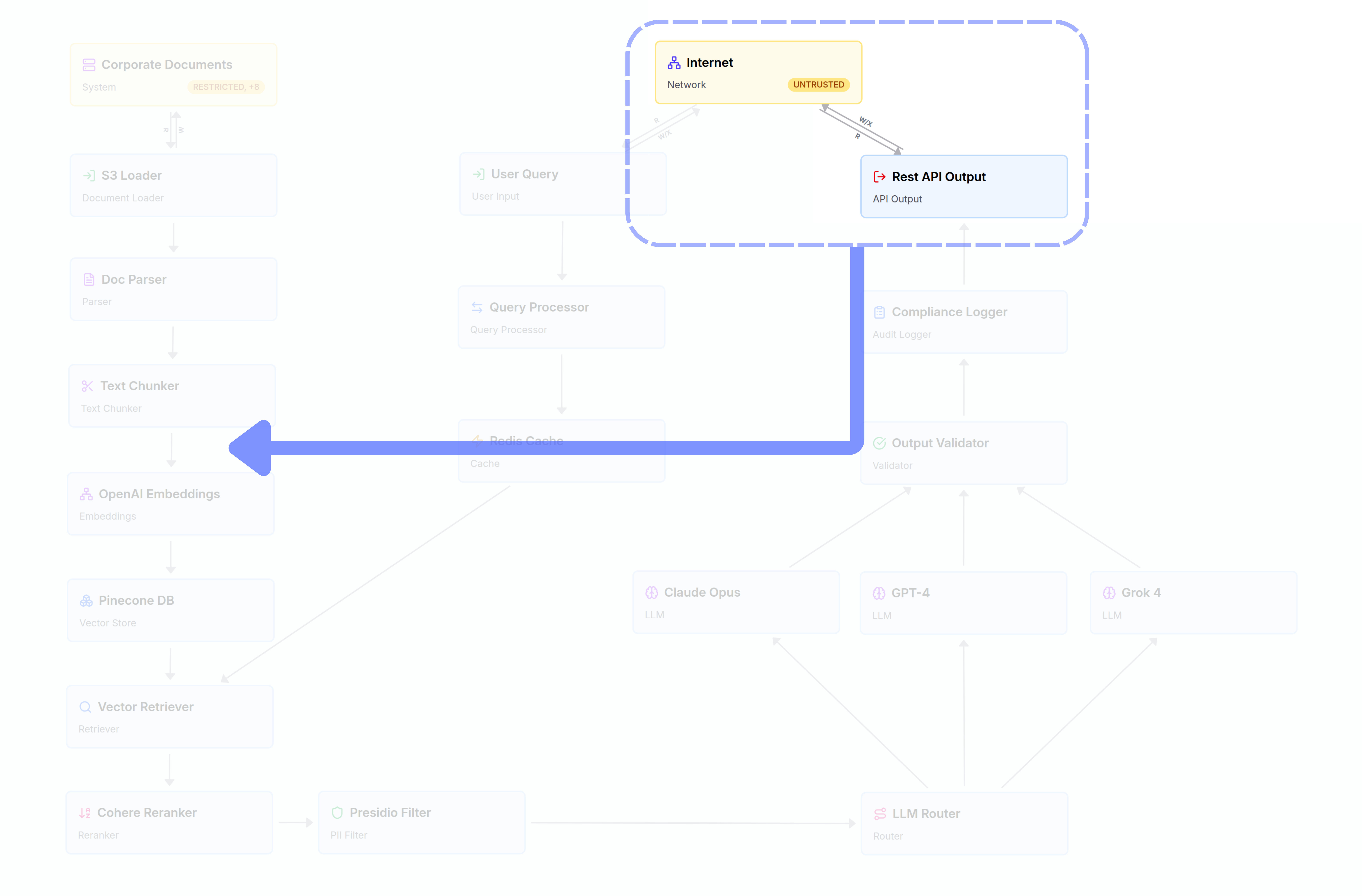

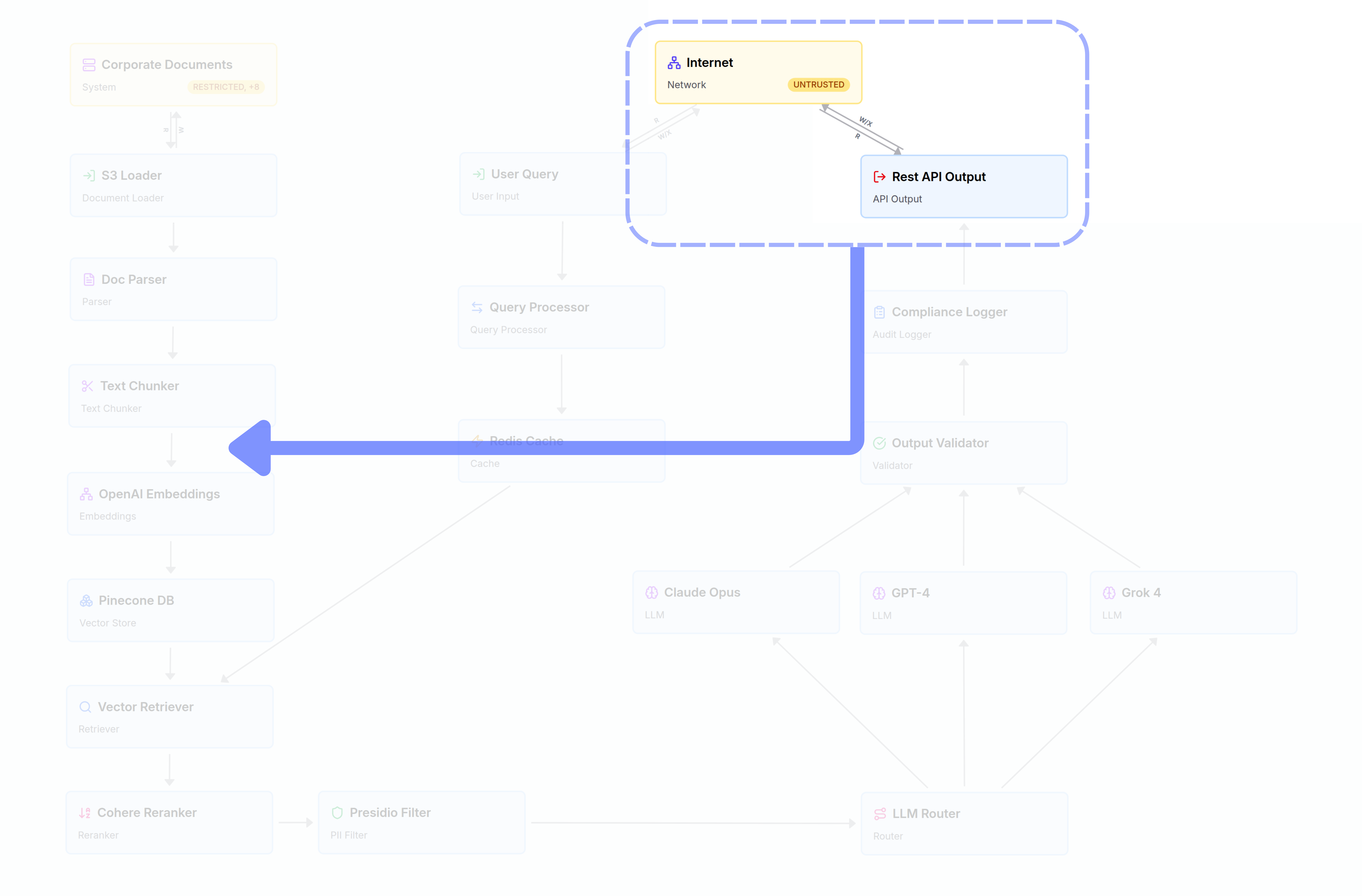

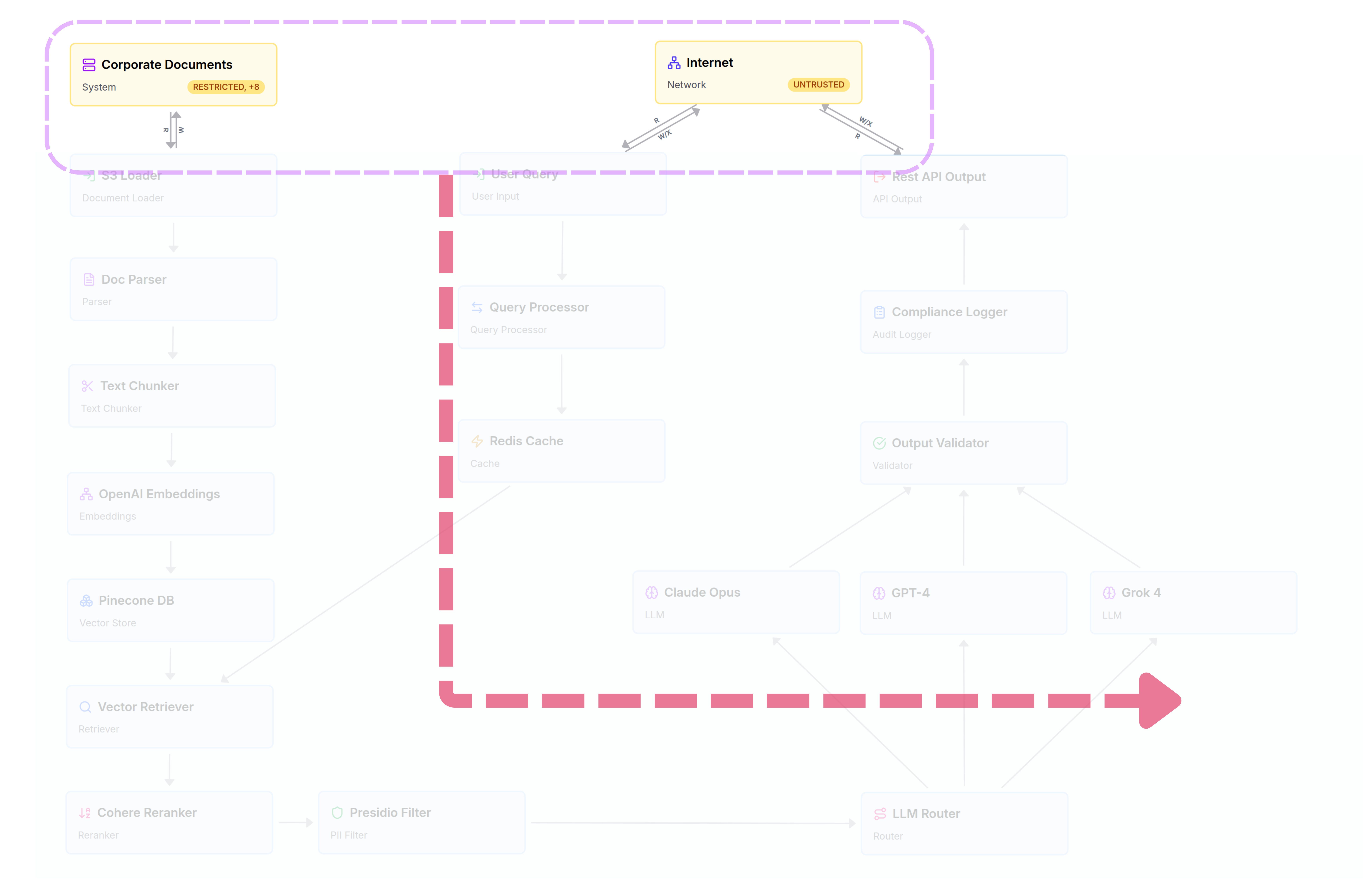

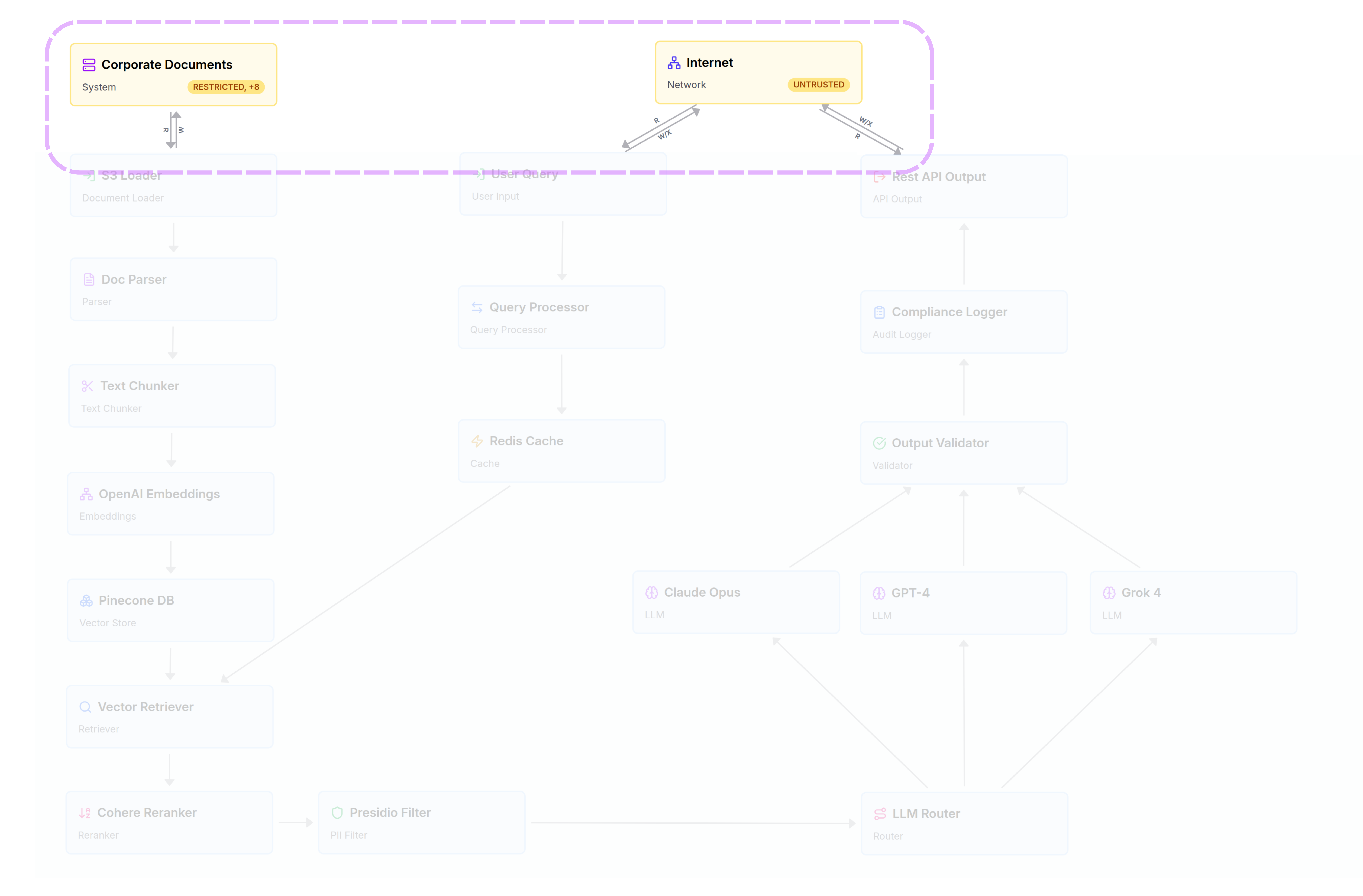

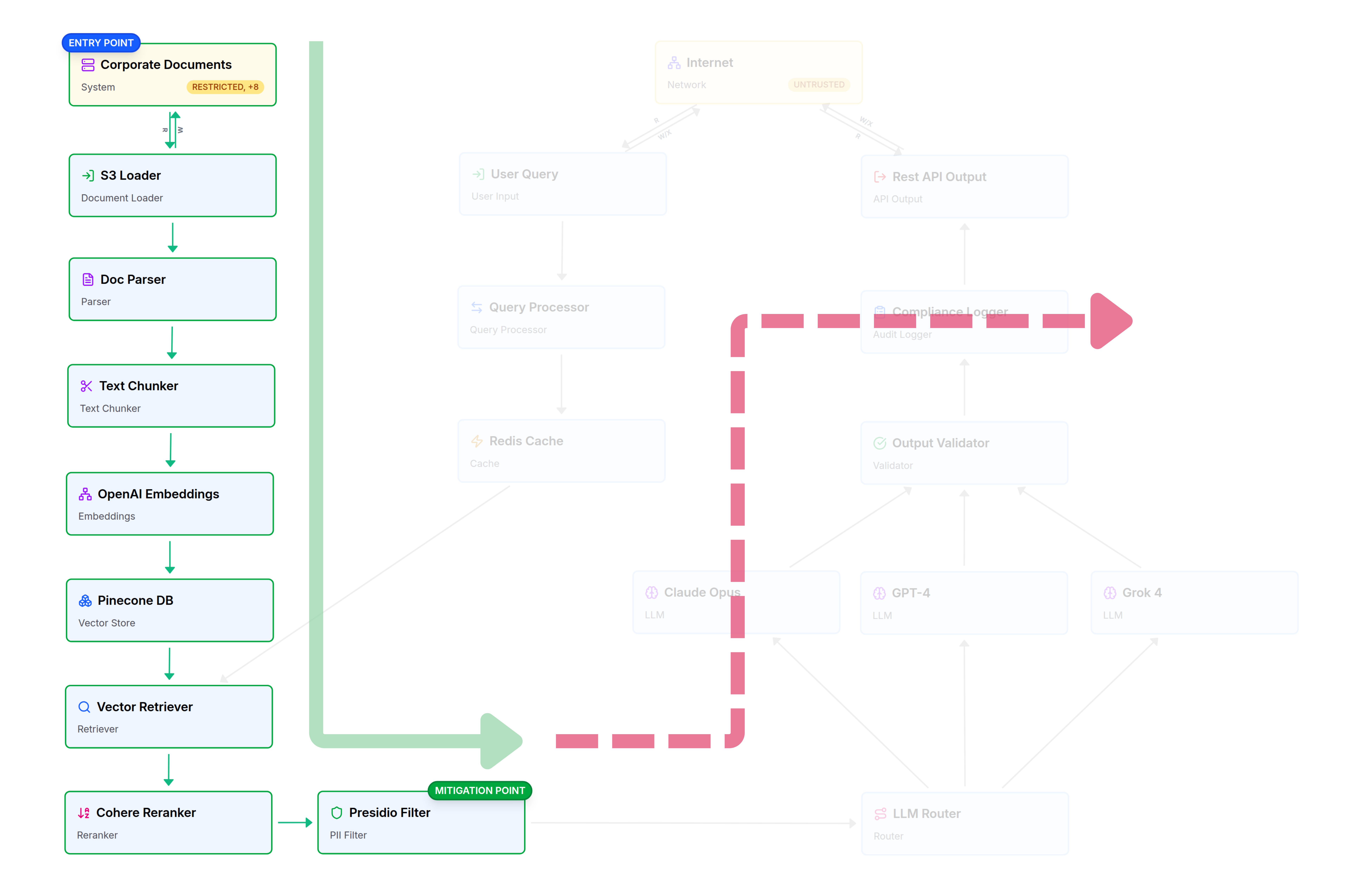

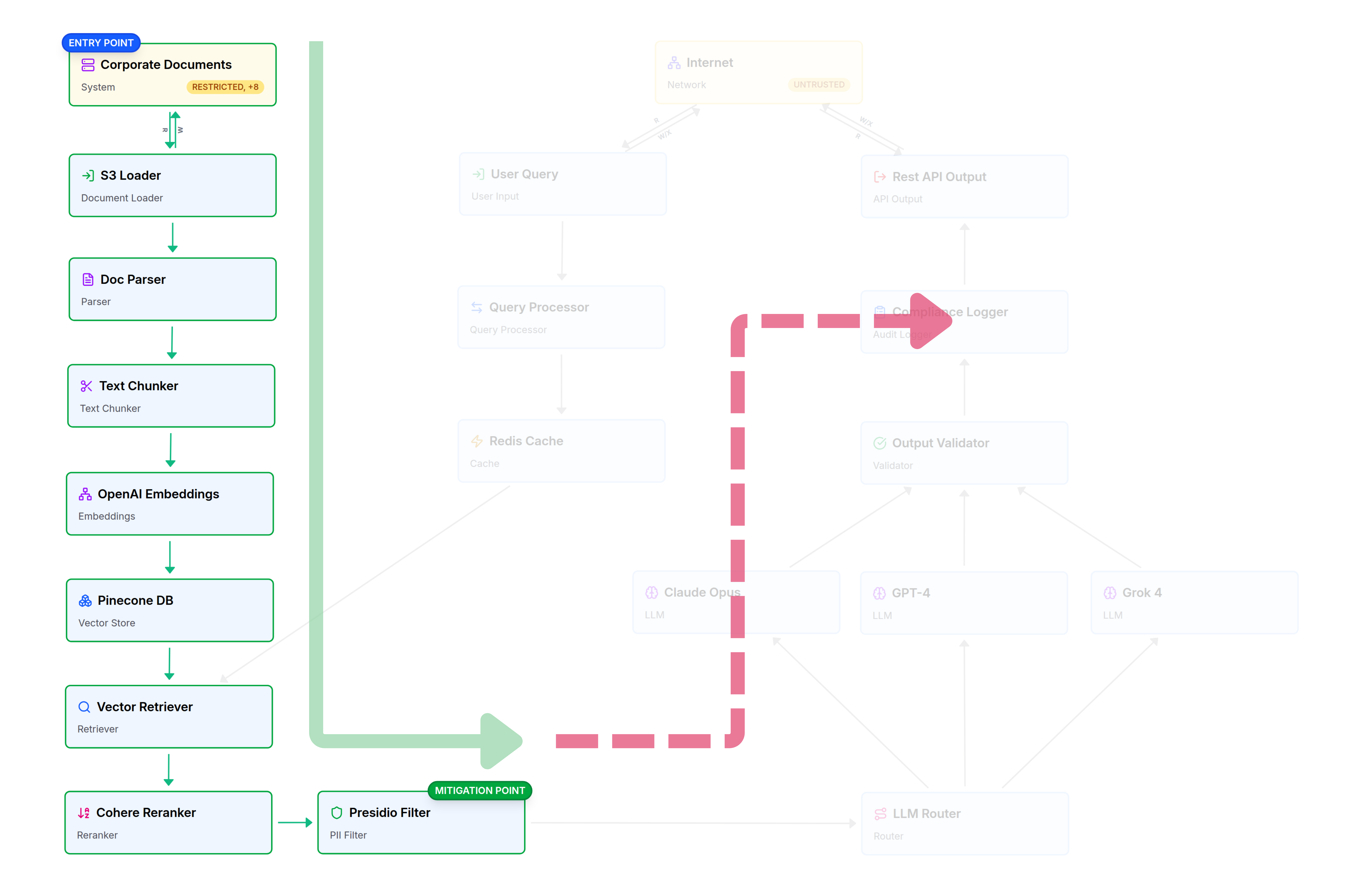

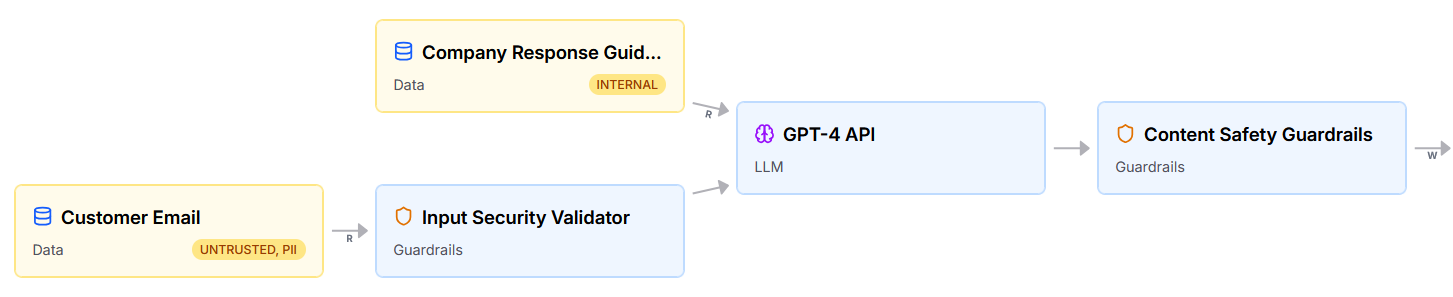

How we see your systems:

One shared map of components, permissions, and data flow — generated automatically.

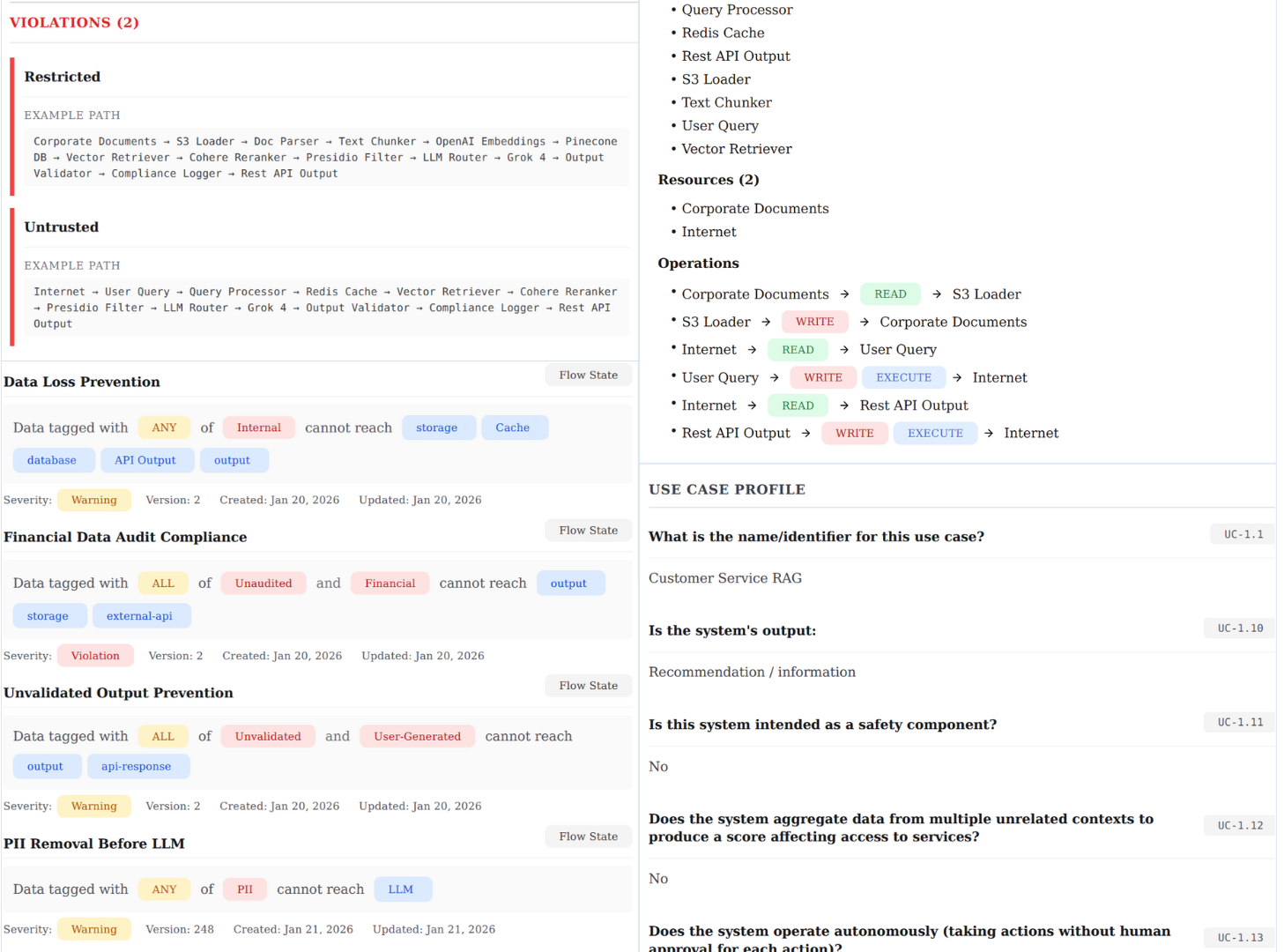

Components

The building blocks of your AI system: models, APIs, guardrails, humans.

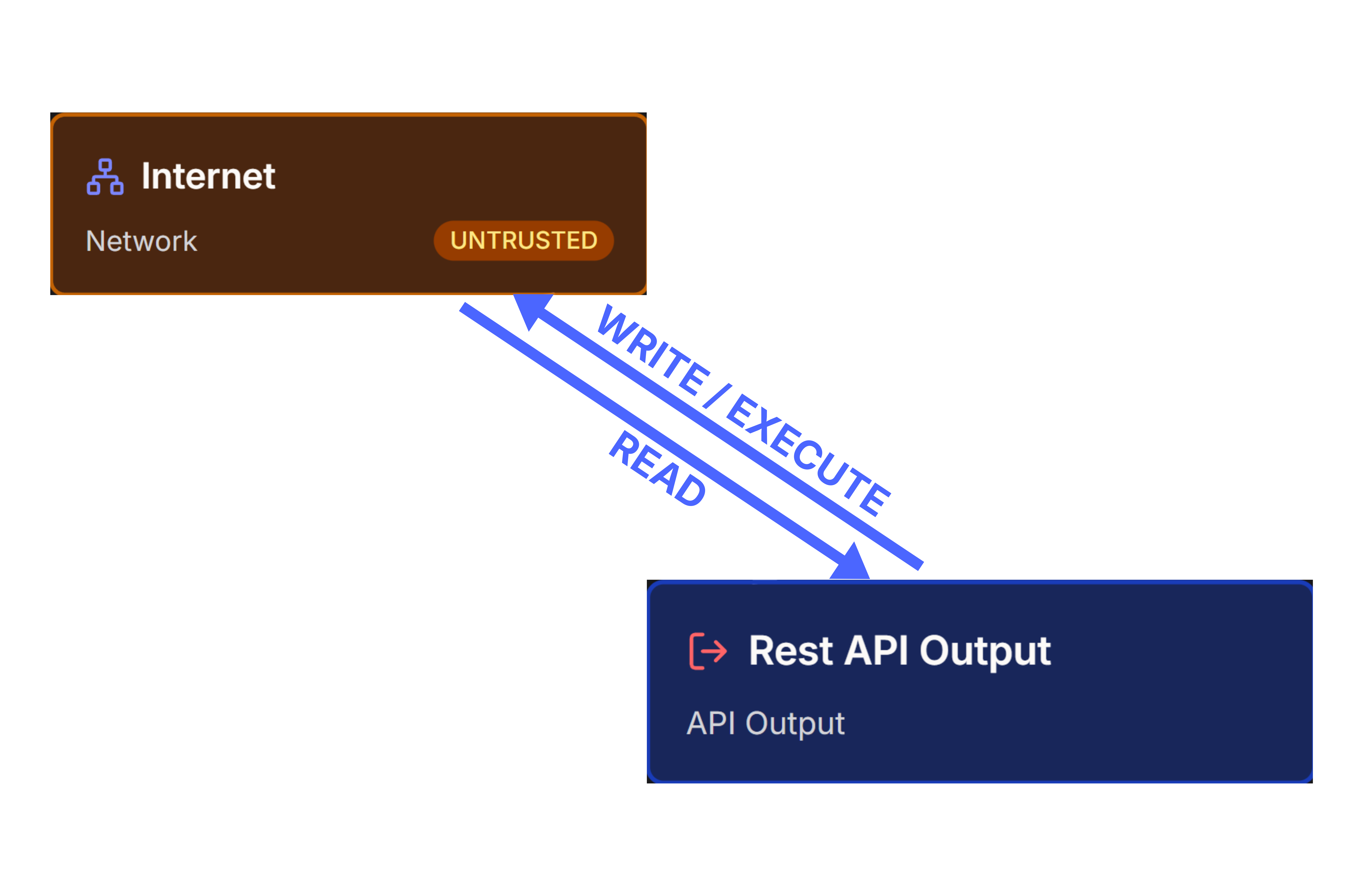

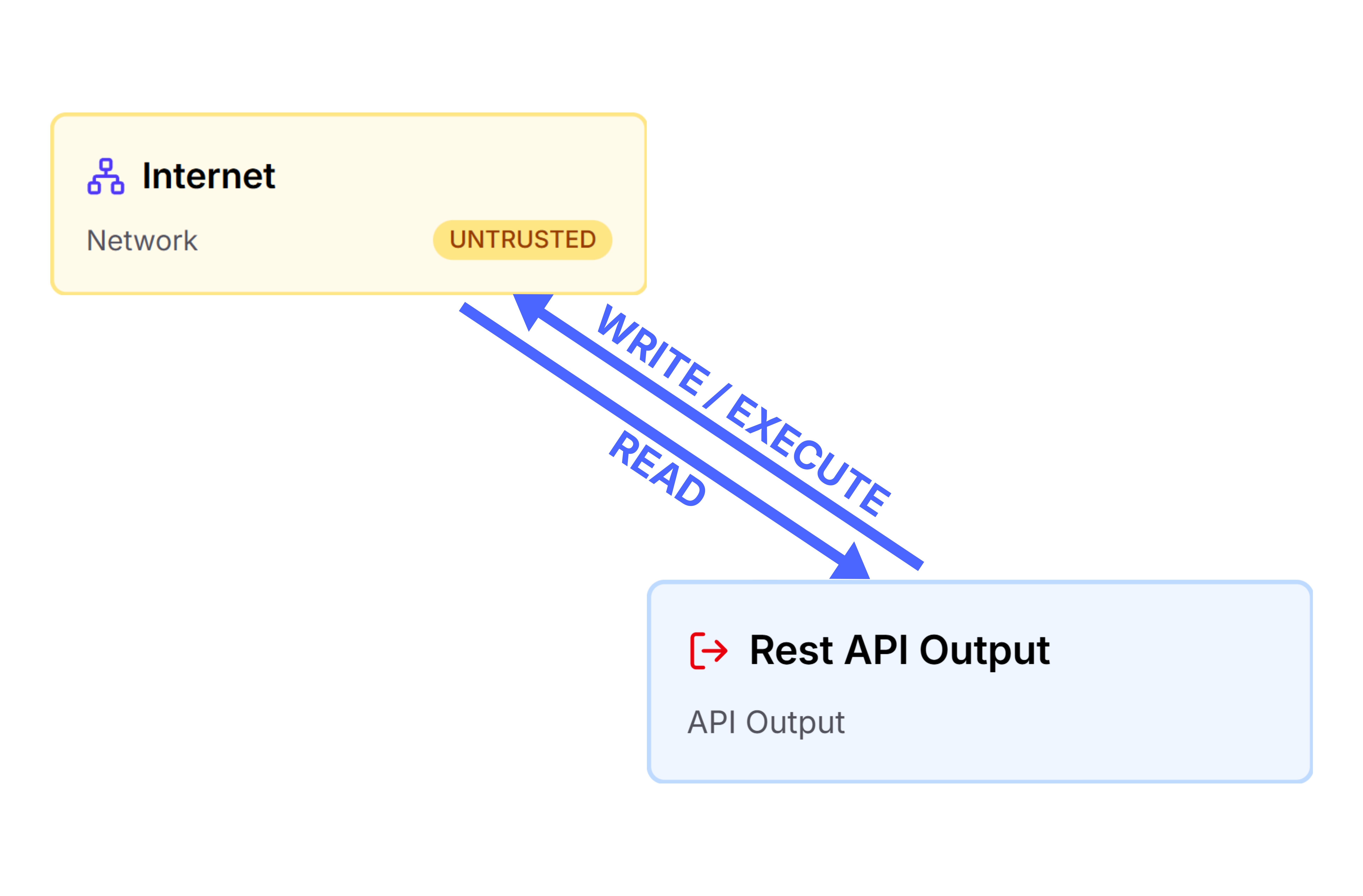

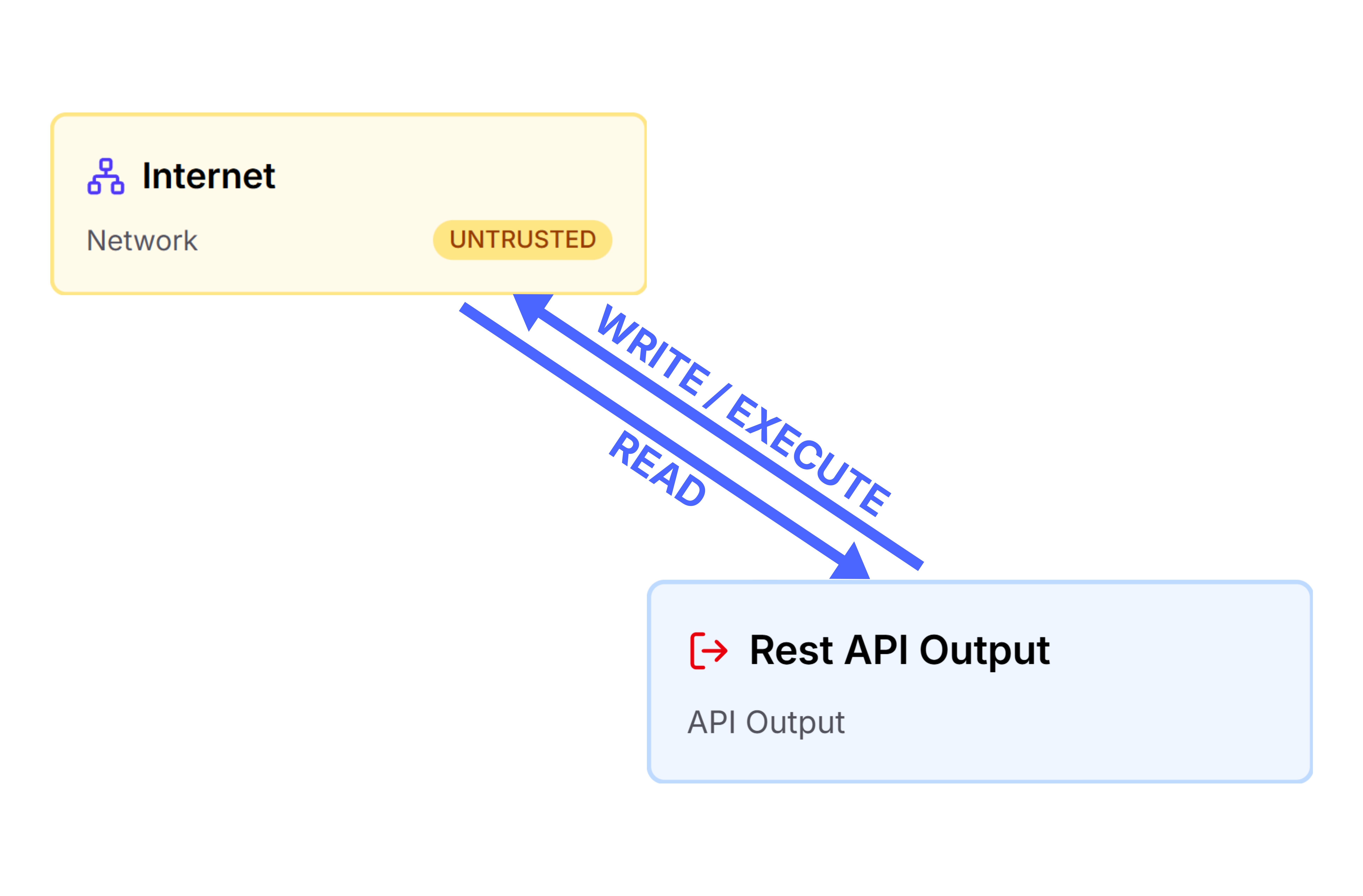

Operations

The actions those components perform on resources, such as read, write, execute, and access.

Resources

The external data and systems your AI can touch: databases, APIs, email, the internet.

Execution

The real execution paths—how data flows from input to output.

Governance that moves at the speed of your system

Change Diff

Every time your system changes (new model, new tool, new permission), Model Monster updates the blueprint automatically.

Automatic Re-evaluation

Risks are re-evaluated against your policies. Evidence stays current. Reviews don't start from scratch.

Design-time Checks

Catch issues before deployment, not during last-minute reviews.

Governance doesn't have to slow you down—if it's built around change.

What you get on day one:

Immediate visibility into your AI systems with production-ready tools

System Blueprint

- Components + resources + data flows
- Full change history

CORE Report

- Modular report builder
- Mapped controls + use case context

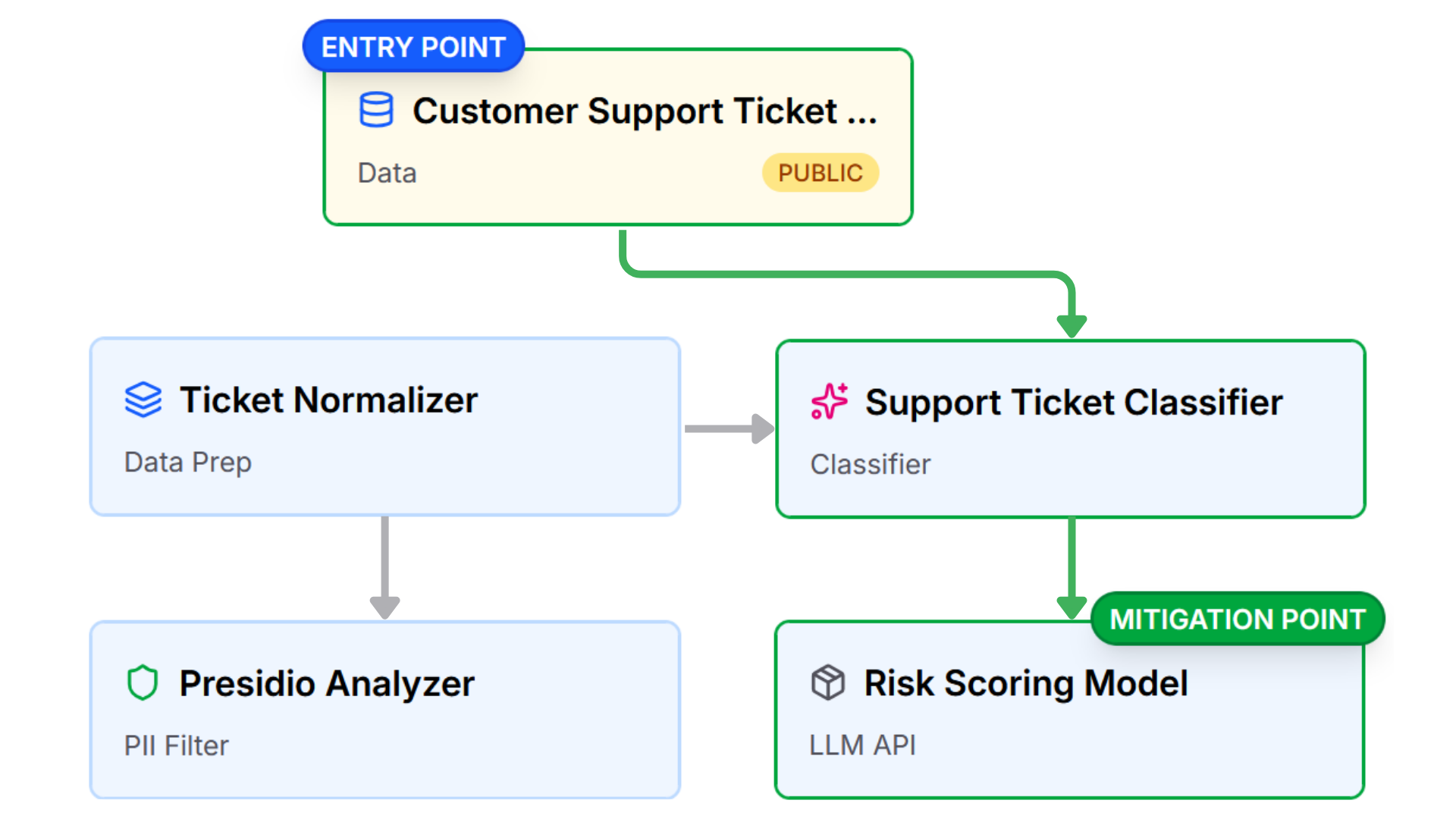

Execution Flows

- Entry points + warning points
- Risk pathway visualization

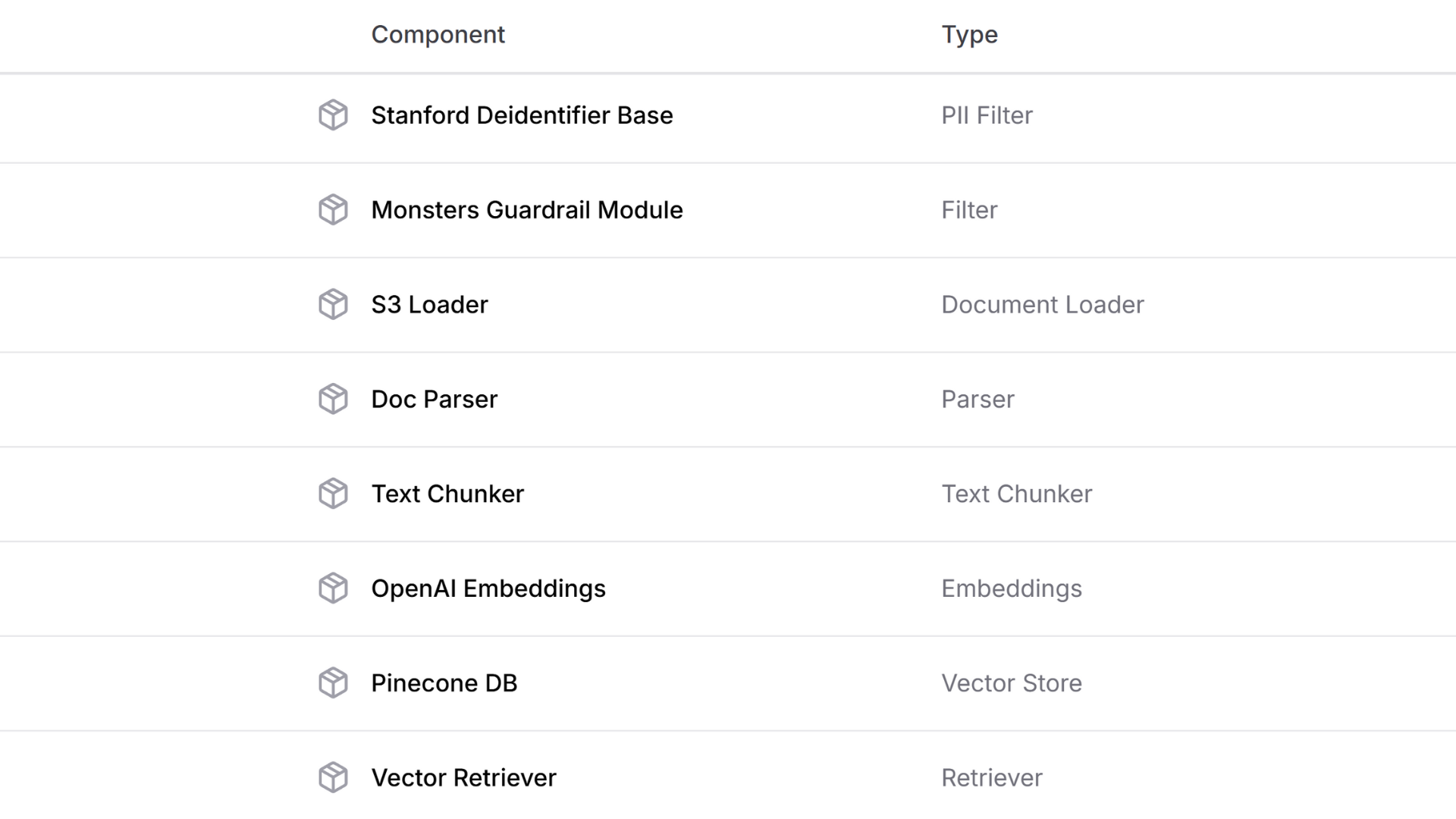

Component Library

- Thousands of common AI components
- Simplifies building AI Bills of Materials

Built for teams who need real answers

A shared source of truth across legal, compliance, security, and product—without slowing delivery.

Legal & Compliance

Demonstrate due diligence with clear, regulator-ready system documentation. Architecture-level visibility means stronger "reasonable care" arguments.

Risk & Audit

Evaluate policies against real architectures, not assumptions. Clear data access and permissions. Better incident response and audits.

Engineering & Product

Use the systems you already build—no parallel documentation process. Ship faster with fewer last-minute blockers. Shared language across technical and non-technical teams.

Ready to see your AI systems clearly?

Now accepting organizations into our early access program. Work with us to see how Model Monster can accelerate your governance.